When you have a lot of URLs affected by this problem, and you only want to look at some parts of your website, use the pyramid symbol on the right side.īefore you start troubleshooting, consider if the URLs in the list really should be indexed. The list can be filtered by URL or URL path. There you may see the “Improve page appearance” table.Īfter clicking on the status, you will see a list of affected URLs and a chart showing how their number has changed over time.

Robots txt no index how to#

How to fix “Indexed, though blocked by robots.txt?”įirstly, find the “Indexed, though blocked by robots.txt” status at the bottom of the Page Indexing report in your Google Search Console. Let’s say, for example, you’re still working on that page’s content, and it’s not ready for public view.īut if the page gets indexed, users can find it, enter it, and form a negative opinion about your website. If you intentionally used the robots.txt Disallow directive for a given page, you don’t want users to find that page on Google. To see if your page has the same problem and is “Indexed, though blocked by robots.txt,” go to your Google Search Console and check it in the URL Inspection Tool. It only shows the URL and a basic title based on what Google found on the other websites that link to Jamboard. That’s because Google couldn’t crawl it and collect any information to display.

While the page ranks, it’s displayed without any additional information. Google Jamboard is blocked from crawling, but with nearly 20000 links from other websites (according to Ahrefs), Google still indexed it. Here’s an example – one of Google’s own products: Without those elements, users won’t know what to expect after entering the page and may choose competing websites, drastically lowering your CTR.

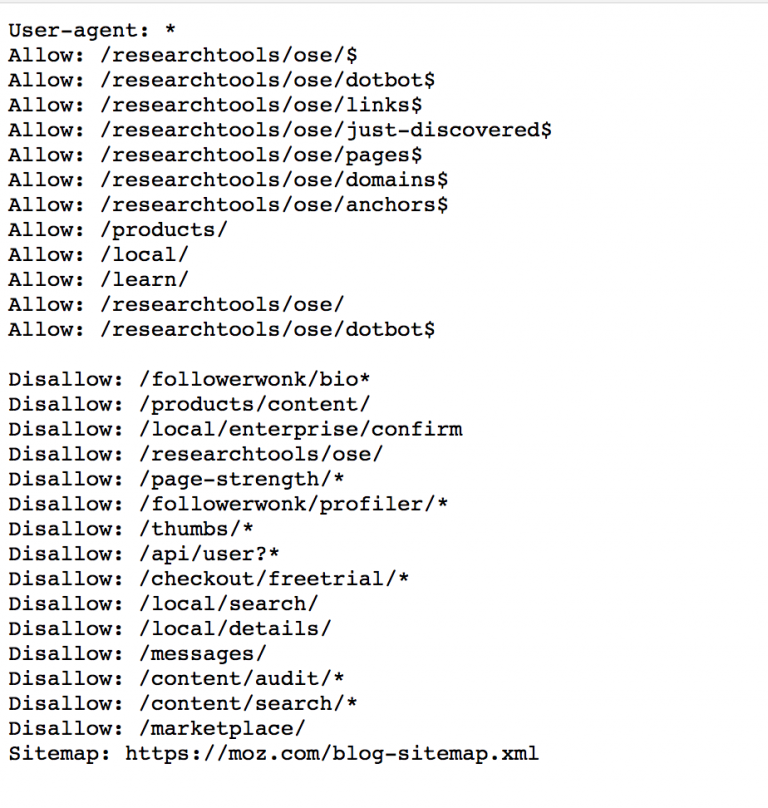

In this scenario, Google is usually motivated by many links leading to the page blocked by robots.txt. Sometimes Google decides to index a discovered page despite being unable to crawl it and understand its content. What causes “Indexed, though blocked by robots.txt” in Google Search Console? Whenever you put a Disallow directive in it, Googlebot knows it cannot visit pages to which this directive applies.įor detailed instructions on modifying and managing the file, see our robots.txt guide. Robots.txt is a file that you can use to control how Googlebot crawls your website.
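To check which URLs a Disallow directive actually covers, a minimal Python sketch using the standard library's `urllib.robotparser` might look like the following. The robots.txt content, the example.com URLs, and the `blocked_urls` helper are all hypothetical placeholders, not taken from any real site. Python's parser only approximates Googlebot's matching (for instance, it may treat wildcard patterns differently), so treat the result as a first pass and confirm it in the URL Inspection Tool.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt content; replace with your site's actual rules.
ROBOTS_TXT = """\
User-agent: *
Disallow: /drafts/
"""

# Hypothetical URLs, e.g. copied from the list of affected URLs.
URLS = [
    "https://example.com/drafts/new-page",
    "https://example.com/blog/published-post",
]


def blocked_urls(robots_txt, urls, user_agent="Googlebot"):
    """Return the URLs that the given robots.txt rules disallow for user_agent."""
    parser = RobotFileParser()
    # parse() accepts the file as a list of lines, so no network request is made.
    parser.parse(robots_txt.splitlines())
    return [url for url in urls if not parser.can_fetch(user_agent, url)]


if __name__ == "__main__":
    for url in blocked_urls(ROBOTS_TXT, URLS):
        print("Blocked by robots.txt:", url)
```

Any URL reported here that also appears in Search Console as indexed is a candidate for the “Indexed, though blocked by robots.txt” status.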
